As artificial intelligence advances, it demands better neural network models, and those models have become harder to design by hand. Neural Architecture Search (NAS) is a significant step toward automated deep learning model design: instead of relying on human experts to choose a network's structure, it uses optimization algorithms to search for one. NAS has found architectures that match or exceed manually designed networks in accuracy and efficiency, while reducing the dependence on human trial and error.

Here we examine Neural Architecture Search, from its core principles to its key applications and its potential to transform AI.

What is Neural Architecture Search?

Neural Architecture Search is an automated method for finding a well-suited neural network design for a given task. Designing a model by hand requires extensive experimentation and significant expertise to choose the right layers, connections, and hyperparameter values, and many attempts fail along the way. NAS replaces this with a structured search through a large space of candidate architectures, selecting the design that best meets predefined performance targets such as accuracy, latency, and computational efficiency. Because that space is usually far too large to enumerate, NAS explores it selectively rather than exhaustively.
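To make "large" concrete, here is a minimal sketch that encodes a small hypothetical search space (the layer count, operation names, widths, and activations are all illustrative assumptions) and counts how many distinct architectures it contains. It also samples one candidate at random, which is how many search strategies start.

```python
import math
import random

# Hypothetical toy search space: each of NUM_LAYERS layers independently
# picks one option from every choice list below.
NUM_LAYERS = 8
CHOICES = {
    "op": ["conv3x3", "conv5x5", "depthwise3x3", "max_pool", "identity"],
    "width": [16, 32, 64],
    "activation": ["relu", "gelu"],
}

def search_space_size(num_layers, choices):
    """Distinct architectures = (options per layer) ** num_layers."""
    per_layer = math.prod(len(options) for options in choices.values())
    return per_layer ** num_layers

def sample_architecture(num_layers, choices, rng):
    """Draw one architecture uniformly at random: a list of per-layer configs."""
    return [
        {name: rng.choice(options) for name, options in choices.items()}
        for _ in range(num_layers)
    ]

if __name__ == "__main__":
    print(f"{search_space_size(NUM_LAYERS, CHOICES):,} candidate architectures")
    print(sample_architecture(NUM_LAYERS, CHOICES, random.Random(0))[0])
```

Even this small space holds 30^8 = 656,100,000,000 architectures. At a hypothetical one GPU-hour to train each candidate, evaluating them all would take more than 650 billion GPU-hours, which is why NAS samples the space instead of enumerating it.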

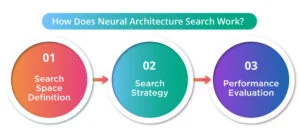

How Does Neural Architecture Search Work?

NAS follows a structured process to identify the best model architecture:

1. Search Space Definition – It defines the range of possible neural network configurations, including layer types, connections, and activation functions.

2. Search Strategy – NAS employs algorithms such as reinforcement learning, evolutionary algorithms, or gradient-based methods to explore different architectures.

3. Performance Evaluation – It assesses the generated models using validation data, refining the search to improve accuracy and efficiency.
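The three steps above can be sketched as a minimal random-search loop. Random search is one of the simplest search strategies and is commonly used as a baseline; here it stands in for the reinforcement-learning, evolutionary, or gradient-based strategies named in step 2. The operation names and the `evaluate` function are hypothetical: a real system would train each candidate and measure its validation accuracy instead.

```python
import random

# Step 1 (hypothetical search space): one operation per layer, chosen from OPS.
OPS = ["conv3x3", "conv5x5", "max_pool", "identity"]
NUM_LAYERS = 4

def sample_architecture(rng):
    """Draw one candidate: a tuple with one op per layer."""
    return tuple(rng.choice(OPS) for _ in range(NUM_LAYERS))

def evaluate(arch):
    """Step 3 (stand-in): a real NAS system would train `arch` and return its
    validation accuracy. This hypothetical proxy scores each op with a fixed
    value so the example runs instantly."""
    scores = {"conv3x3": 0.20, "conv5x5": 0.22, "max_pool": 0.05, "identity": 0.10}
    return sum(scores[op] for op in arch) / len(arch)

def random_search(num_trials, seed=0):
    """Step 2 (search strategy): random search, keeping the best candidate seen."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = evaluate(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score

if __name__ == "__main__":
    arch, score = random_search(num_trials=50)
    print(arch, round(score, 3))
```

Swapping in a smarter strategy only changes `random_search`; the search space and evaluation steps stay the same, which is why NAS methods are usually described in terms of these three components.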

By automating this process, NAS reduces the manual effort and engineering time required to develop cutting-edge AI models, although the search itself can be computationally expensive.
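Gradient-based strategies, the third family named in step 2, work differently: rather than sampling discrete architectures, they relax the choice between operations into a continuous, differentiable one, an idea popularized by DARTS (Differentiable Architecture Search). The toy sketch below shows that relaxation in pure Python on scalar inputs. The candidate operations and data are hypothetical, and it optimizes only the architecture parameters `alphas`; DARTS itself also trains the network's ordinary weights, alternating the two updates on training and validation data.

```python
import math

# Hypothetical candidate operations on a scalar input; in a real network
# these would be layers such as convolutions, pooling, or a skip connection.
OPS = {
    "double": lambda x: 2.0 * x,
    "square": lambda x: x * x,
    "zero": lambda x: 0.0,
}
OP_FNS = list(OPS.values())

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def mixed_op(x, alphas):
    """Continuous relaxation: a softmax(alphas)-weighted sum of every
    candidate op, which makes the choice of op differentiable."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, OP_FNS))

def loss_and_grad(alphas, data):
    """Squared error of the mixed op over (x, y) pairs, plus its gradient with
    respect to alphas, using d(out)/d(alpha_j) = w_j * (o_j - out)."""
    weights = softmax(alphas)
    loss, grad = 0.0, [0.0] * len(alphas)
    for x, y in data:
        outs = [op(x) for op in OP_FNS]
        out = sum(w * o for w, o in zip(weights, outs))
        err = out - y
        loss += err * err
        for j, (w, o) in enumerate(zip(weights, outs)):
            grad[j] += 2.0 * err * w * (o - out)
    return loss, grad

def search(data, steps=200, lr=0.01):
    """Plain gradient descent on the architecture parameters only."""
    alphas = [0.0] * len(OP_FNS)
    for _ in range(steps):
        _, grad = loss_and_grad(alphas, data)
        alphas = [a - lr * g for a, g in zip(alphas, grad)]
    return alphas

def derive_op(alphas):
    """Discretize after the search: keep the op with the largest weight."""
    names = list(OPS)
    return names[max(range(len(alphas)), key=lambda i: alphas[i])]

if __name__ == "__main__":
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # hypothetical target: y = 2x
    alphas = search(data)
    print(derive_op(alphas), [round(w, 3) for w in softmax(alphas)])
```

Because every candidate contributes to the output in proportion to its weight, one gradient step updates the preference for all operations at once, instead of training and scoring each discrete architecture separately.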

The Need for Neural Architecture Search & Challenges in Traditional Model Design

Designing neural networks through manual tuning is difficult because it demands deep expertise in AI and machine learning fundamentals. Some of the challenges include:

- Trial-and-Error Approach – Finding a good design requires engineers to experiment with numerous architectural variations.

- High Computational Cost – Training and comparing many candidate models consumes substantial computing resources.

- Scalability Issues – For advanced AI applications that work with large datasets, manual model design becomes burdensome and does not scale.

Advantages of Neural Architecture Search

By automating the design process, NAS provides several advantages:

- Improved productivity and speed – NAS accelerates development, shortening deployment times for AI applications.

- Greater efficiency and effectiveness – NAS can discover efficient structures that score better on target metrics while requiring less computational power.

- Flexibility and adaptability – NAS can produce models tailored to specific requirements, such as image recognition or natural language processing.
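The efficiency point can be illustrated with parameter counts. A search space that offers both standard and depthwise separable convolutions (a factorization used in many efficient mobile networks) lets the search trade a little accuracy for far fewer weights. In the sketch below, the candidate models and their accuracies are hypothetical; only the parameter-count formulas are standard.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Weights in a standard k x k convolution: k*k*c_in*c_out (+ c_out biases)."""
    return k * k * c_in * c_out + (c_out if bias else 0)

def depthwise_separable_params(k, c_in, c_out, bias=True):
    """A depthwise k x k conv (one filter per input channel) followed by a
    1 x 1 pointwise conv that mixes channels."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise

# Hypothetical candidates: (name, block types, made-up validation accuracy).
CANDIDATES = [
    ("all_standard", ["standard"] * 4, 0.910),
    ("mixed", ["standard", "separable", "separable", "standard"], 0.905),
    ("all_separable", ["separable"] * 4, 0.895),
]

def model_params(block_types, k=3, channels=64):
    """Total conv weights for a stack of equal-width blocks (biases omitted)."""
    fn = {"standard": conv_params, "separable": depthwise_separable_params}
    return sum(fn[t](k, channels, channels, bias=False) for t in block_types)

def best_under_budget(candidates, max_params):
    """Most accurate candidate whose parameter count fits the budget, or None."""
    feasible = [c for c in candidates if model_params(c[1]) <= max_params]
    return max(feasible, key=lambda c: c[2]) if feasible else None

if __name__ == "__main__":
    for name, blocks, acc in CANDIDATES:
        print(name, model_params(blocks), acc)
    print(best_under_budget(CANDIDATES, max_params=100_000))
```

For a 3 x 3 layer with 64 input and 64 output channels (no biases), a standard convolution needs 36,864 weights while the separable version needs 4,672, roughly 7.9 times fewer. A search that scores candidates against a parameter budget can exploit that gap automatically.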

A growing number of industries are adopting NAS because it lets them build deep learning models tuned to their own data and performance requirements.

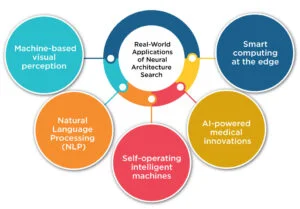

Real-World Applications of Neural Architecture Search

1. Computer Vision

NAS has significantly advanced computer vision, improving results in image classification, object detection, and facial recognition. Architectures produced by Google's AutoML work and by Facebook's PyTorch-based NAS research have been reported to outperform the hand-designed models that preceded them.

2. Natural Language Processing (NLP)

NAS has also benefited NLP models, including modern transformer-based architectures. It optimizes architectures for text processing tasks such as language translation, sentiment analysis, and text generation, helping to advance AI-powered virtual assistants and chatbots.
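For transformer models, a search space can cover choices such as depth, hidden width, number of attention heads, and feed-forward size. The sketch below enumerates a hypothetical space of that kind, discards invalid combinations (the hidden width must split evenly across attention heads), and applies a rough parameter budget. The value lists and budget are illustrative assumptions, and the parameter estimate ignores biases, layer normalization, and embeddings.

```python
import itertools

# Hypothetical transformer-encoder search space.
SPACE = {
    "num_layers": [4, 6, 8],
    "d_model": [256, 384, 512],
    "num_heads": [4, 6, 8],
    "d_ff_multiplier": [2, 4],
}

def is_valid(cfg):
    # Multi-head attention divides d_model evenly across the heads.
    return cfg["d_model"] % cfg["num_heads"] == 0

def approx_params(cfg):
    """Rough weight count per model: per layer, 4*d^2 for the Q, K, V and
    output projections plus 2*d*d_ff for the two feed-forward matrices."""
    d = cfg["d_model"]
    d_ff = d * cfg["d_ff_multiplier"]
    return cfg["num_layers"] * (4 * d * d + 2 * d * d_ff)

def enumerate_configs(space, max_params):
    """Yield every valid configuration whose estimated size fits the budget."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        cfg = dict(zip(keys, values))
        if is_valid(cfg) and approx_params(cfg) <= max_params:
            yield cfg

if __name__ == "__main__":
    configs = list(enumerate_configs(SPACE, max_params=10_000_000))
    print(len(configs), "configurations fit the budget")
```

The surviving configurations would then be handed to a search strategy and evaluated on the target task, such as translation or sentiment analysis.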

3. Autonomous Systems

Self-driving vehicles and robotic systems must make decisions in real time, and optimized neural networks improve both their perception and their controllers. With NAS, organizations can produce robust yet lightweight neural models that improve navigation, obstacle recognition, and motion planning and control.

4. AI-Powered Healthcare

NAS improves the deep learning models that analyze medical images for disease diagnosis and treatment evaluation. It can also speed up drug discovery by designing more effective models for screening candidate therapeutic molecules.

5. Edge Computing

NAS offers a way to build high-performing AI systems that run on edge devices such as smartphones, smart cameras, and IoT sensors. By reducing model complexity while preserving performance, NAS makes powerful AI applications feasible in low-power deployment settings.
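On edge devices, the search usually has to balance accuracy against latency measured on the target hardware. One published form of such an objective, from Google's MnasNet work, scores a model as ACC × (LAT / T)^w, where T is the target latency and the exponent w takes one value when the model meets the target and another when it misses it. The sketch below implements that formula; the candidate models and their measurements are hypothetical, and the default exponents of -0.07 are an assumption chosen to act as a soft constraint.

```python
def latency_aware_reward(accuracy, latency_ms, target_ms, alpha=-0.07, beta=-0.07):
    """ACC * (LAT / T) ** w, with w = alpha if the model meets the latency
    target T and w = beta if it exceeds it."""
    w = alpha if latency_ms <= target_ms else beta
    return accuracy * (latency_ms / target_ms) ** w

# Hypothetical measurements: (validation accuracy, latency on the device in ms).
CANDIDATES = {
    "large": (0.76, 120.0),
    "medium": (0.74, 70.0),
    "tiny": (0.62, 20.0),
}

def pick_for_device(candidates, target_ms, **reward_kwargs):
    """Name of the candidate with the highest latency-aware reward."""
    return max(
        candidates,
        key=lambda name: latency_aware_reward(*candidates[name], target_ms, **reward_kwargs),
    )

if __name__ == "__main__":
    for name, (acc, lat) in CANDIDATES.items():
        print(name, round(latency_aware_reward(acc, lat, target_ms=80.0), 4))
    print("chosen:", pick_for_device(CANDIDATES, target_ms=80.0))
```

With the soft exponents, a model that misses the target is penalized only mildly rather than rejected outright. Setting `alpha=0.0` and `beta=-1.0` instead ignores latency below the target and penalizes overshoot much more steeply.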

The Future of Neural Architecture Search

1. Improving Efficiency with Meta-Learning

Upcoming NAS systems are expected to integrate meta-learning, letting them adapt their search strategies based on the outcomes of previous searches. This should make the process more efficient, lowering computational requirements and making NAS more widely accessible.

2. Improving Model Transparency and Understanding

One major obstacle in NAS is understanding why particular architectural choices perform well or poorly. Future research priorities include making NAS-generated architectures more interpretable, so that developers can examine the design decisions the search makes.

3. Making NAS Accessible to All

NAS remains an expensive process, largely limited to technology companies with extensive high-performance computing resources. Future advances are expected to reduce its computational cost, making it accessible to smaller companies and academic research groups.

4. Hybrid NAS Approaches

Hybrid methods combine Neural Architecture Search with manual expert guidance, joining automation with human intuition. This approach can yield designs that fit specific real-world problems more closely.
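One way to combine the two is to let experts encode their knowledge as constraints that prune the search space before any automated search begins. In the sketch below, the operations and both rules are hypothetical examples of such guidance.

```python
import itertools

OPS = ["conv3x3", "conv5x5", "max_pool", "identity"]
NUM_LAYERS = 3

# Hypothetical expert rules, written as plain predicates over an architecture.
def starts_with_conv(arch):
    return arch[0].startswith("conv")

def no_back_to_back_pooling(arch):
    return all(not (a == b == "max_pool") for a, b in zip(arch, arch[1:]))

EXPERT_RULES = [starts_with_conv, no_back_to_back_pooling]

def constrained_space(ops, num_layers, rules):
    """Enumerate every architecture, keeping only those that satisfy all rules."""
    return [
        arch
        for arch in itertools.product(ops, repeat=num_layers)
        if all(rule(arch) for rule in rules)
    ]

if __name__ == "__main__":
    allowed = constrained_space(OPS, NUM_LAYERS, EXPERT_RULES)
    print(len(allowed), "of", len(OPS) ** NUM_LAYERS, "architectures remain")
```

In this 3-layer, 4-operation space, the two rules cut the 64 possible architectures down to 30, and the remaining list can be handed to any automated search strategy.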

5. Integration with Quantum Computing

As quantum computing research progresses, it may provide improved optimization algorithms that let NAS explore larger search spaces more efficiently. Such a development could transform how AI models are created and lead to breakthroughs in areas such as climate prediction and materials science.

Conclusion

Neural Architecture Search is an automated approach that discovers optimized architectures without extensive human involvement in model design. By automating design, NAS overcomes the limitations of manual methods and often achieves higher performance across diverse applications, from healthcare to autonomous systems.

As AI evolves, NAS is likely to play a central role in shaping how machine learning models are designed. Through better meta-learning approaches, enhanced interpretability, and hybrid design methods, NAS will open AI model development to a wider range of researchers and industries worldwide. Embracing Neural Architecture Search can drive the next transformation in AI: intelligent systems that are fast, efficient, and adaptable.
